Evaluating solid-state drives (SSDs) for enterprise applications can present a number of challenges. You want maximum performance for the most demanding servers running mission-critical workloads.

We sat down with Scott Hamilton, Senior Director, Product Management, Data Center Systems at Western Digital, to learn more about SSDs and how they fit into current business environments and data centers.

What features do SSDs need to have in order to offer uncompromised performance for the most demanding servers running mission-critical workloads in enterprise environments? What are some of the misconceptions IT leaders are facing when choosing SSDs?

First, IT leaders must understand environmental considerations, including the application, the use case and its intended workload, before committing to specific SSDs. It’s well understood that uncompromised performance is paramount to support mission-critical workloads in the enterprise environment. However, performance has different meanings to different customers, depending on their respective use cases and available infrastructure.

Uncompromised performance may focus more on latency (and associated consistency), IOPS (and queue depth) or throughput (and block size), depending on the use case and application.
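As a rough illustration of how these metrics relate, the sketch below converts an IOPS figure into throughput at a given block size, and estimates sustained IOPS from queue depth and mean latency using Little's Law. The function names and the sample numbers are assumptions made for this example; they are not figures from the interview or from any specific drive.

```python
def throughput_mb_per_s(iops: float, block_size_kib: float) -> float:
    """Throughput in decimal MB/s implied by an IOPS rate at a given block size."""
    bytes_per_second = iops * block_size_kib * 1024
    return bytes_per_second / 1_000_000


def iops_from_queue_depth(queue_depth: int, mean_latency_us: float) -> float:
    """Little's Law: sustained IOPS = outstanding I/Os / mean latency per I/O."""
    return queue_depth * 1_000_000 / mean_latency_us


if __name__ == "__main__":
    # Hypothetical workload: 100,000 IOPS of 4 KiB random reads.
    print(throughput_mb_per_s(100_000, 4))
    # Hypothetical device: 32 outstanding I/Os at 100 microseconds mean latency.
    print(iops_from_queue_depth(32, 100))
```

The same drive can therefore look very different depending on which of the three numbers a workload actually stresses: small-block random I/O is bounded by IOPS and latency, while large-block sequential I/O is bounded by throughput.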

Additionally, the scale of the application and solution dictates the level of emphasis, whether it be interface-, device- or system-level performance. Similarly, mission-critical workloads may have different expectations or requirements, e.g., high availability support, disaster recovery, or performance and performance consistency. This is where IT leaders need to rationalize and test the best fit for their use case.

Today there are many different SSD segments that fit certain types of infrastructure choices and use cases. For example, PCIe SSD options range from boot drives to performance NVMe SSDs, and they come in different form factors such as M.2 (ultra-light and thin) and U.2 (standard 2.5-inch), to name a few. It’s also important to consider power/performance. Some applications do not require interface saturation and can leverage low-power, single-port mainstream SSDs instead of dual-port, high-power, higher-endurance and higher-performance drives.

IT managers have choices today, and they should weigh them carefully: rationalize and optimize the infrastructure for elasticity and scaling, test, and ultimately align the choice of the best-fit SSD with their future system architecture strategy. My final word of advice: it is not always wise to pick the highest-performing SSD available on the market, as you do not want to pay for a rocket engine for a bike. Understanding the use case and success metrics (e.g., price-capacity, latency, and price-performance in either $/IOPS or $/GB/sec) will help eliminate some of the misconceptions IT leaders face when choosing SSDs.
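The success metrics mentioned above can be compared with simple arithmetic. The following is a minimal sketch, assuming two hypothetical drives with invented prices and specifications; `DriveSpec`, `price_metrics` and `best_fit` are illustrative names, not part of any vendor tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveSpec:
    name: str
    price_usd: float
    capacity_gb: float
    random_read_iops: float
    seq_read_gb_per_s: float


def price_metrics(drive: DriveSpec) -> dict[str, float]:
    """Compute price-capacity and price-performance ratios (lower is better)."""
    return {
        "usd_per_gb": drive.price_usd / drive.capacity_gb,
        "usd_per_iops": drive.price_usd / drive.random_read_iops,
        "usd_per_gb_per_s": drive.price_usd / drive.seq_read_gb_per_s,
    }


def best_fit(drives: list[DriveSpec], metric: str) -> DriveSpec:
    """Return the drive with the lowest cost for the chosen success metric."""
    return min(drives, key=lambda d: price_metrics(d)[metric])


# Hypothetical drives; all numbers are made up for illustration only.
DRIVES = [
    DriveSpec("mainstream", 400.0, 1920.0, 200_000, 2.0),
    DriveSpec("performance", 1200.0, 1600.0, 800_000, 6.4),
]

if __name__ == "__main__":
    for metric in ("usd_per_gb", "usd_per_iops", "usd_per_gb_per_s"):
        print(metric, "->", best_fit(DRIVES, metric).name)
```

With these invented numbers, the cheaper drive wins on price-capacity while the faster drive wins on $/IOPS, which is the point of the advice: the best fit depends on which metric the use case actually cares about.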

How has the pandemic accelerated cloud adoption and how has that translated to digital transformation efforts and the creation of agile data infrastructures?

The rapid increase in our global online footprint is stressing IT infrastructure, from virtual offices, live video calls, online classes, healthcare services and content streaming to social media, instant messaging services, gaming and e-commerce. This is the new normal of our personal and professional lives. There is no doubt that the pandemic has increased dependence on cloud data centers and services. Private, public and hybrid cloud use cases will continue to co-exist due to costs, data governance and strategies, security and legacy application support.

Digital transformation continues all around us, and the pandemic accelerated these initiatives. Before the pandemic, digital transformation projects generally spanned several years, with lengthy and exhaustive cycles for businesses to go online and scale up their web footprint. However, 2020 has really surprised all of us. Tectonic shifts have happened (and are still happening), with projects now taking only weeks or months, even for businesses that are learning to scale up for the first time.

This infrastructure stress will further accelerate technological shifts as well, whether from SAS to NVMe at the endpoints or from DAS- or SAN-based solutions to NVMe over Fabrics (NVMe-oF) based solutions, to deliver greater agility to meet both the dynamic and the unforeseen demands of the future.

Organizations are scrambling to update their infrastructure, and many are battling inefficient data silos and large operational expenses. How can data centers take full advantage of modern NVMe SSDs?

NVMe SSDs are playing a pivotal role in making the new reality possible for people and businesses around the world. As users transition from SAS and SATA, NVMe is not only increasing overall system performance and utilization, it’s also creating next-generation flexible and agile IT infrastructure. By capitalizing on the power of NVMe, SSDs now enable data centers to run more services on their hardware, i.e., improved utilization. This is an important consideration for IT leaders and organizations that are looking to improve efficiencies.

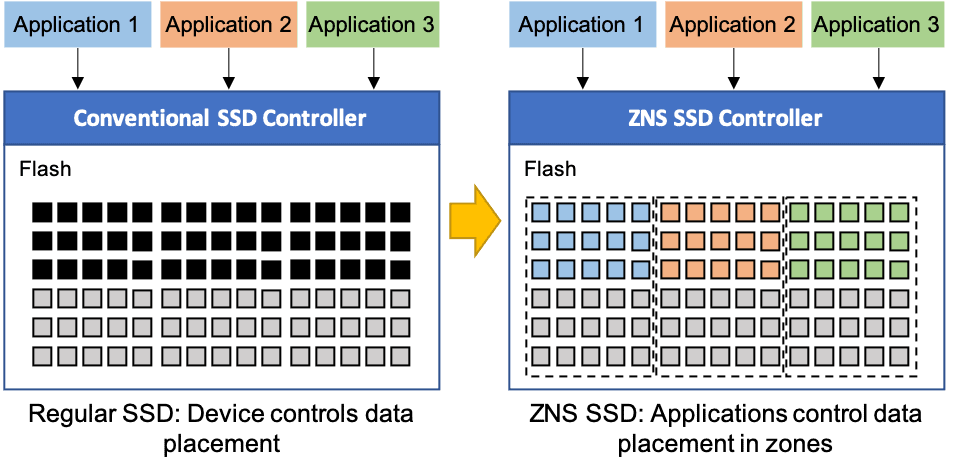

NVMe SSDs are helping both public and private cloud infrastructures in various areas, such as the highest-performance storage, the lowest-latency interface and the flexibility to support needs from boot to high-performance compute, as well as infrastructure productivity. NVMe supports enterprise specifications for server and storage systems, such as namespaces, virtualization support, scatter-gather lists, reservations and fused operations, and emerging technologies such as Zoned Namespaces (ZNS).

Additionally, NVMe-oF extends the benefits of NVMe technology and enables sharing data between hosts and NVMe-based platforms over a fabric. The ratification of the NVMe 1.4 and NVMe-oF 1.1 specifications, with the addition of ZNS, has further strengthened NVMe’s position in enterprise data centers. Therefore, by introducing NVMe SSDs into their infrastructure, organizations will have the tools to get more from their data assets.

What kind of demand for faster hardware do you expect in the next five years?

Now and into the future, data centers of all shapes and sizes are constantly striving to achieve greater scalability, efficiencies and increased productivity and responsiveness with the best TCO. Business leaders and IT decision-makers must understand and navigate through the complexities of cloud, edge and hybrid on-prem data center technologies and architectures, which are increasingly being relied upon to support a growing and complex ecosystem of workloads, applications and AI/IoT datasets.

More than a decade ago, IT systems relied on software running on dedicated general-purpose systems for any application. This created many inefficiencies and scaling challenges, especially with large-scale system designs. Since then, data dependence has grown consistently and exponentially, which has forced data center architects to decouple applications from systems. This was the birth of the hyperconverged infrastructure (HCI) market and now the composable disaggregated infrastructure market.

Next-generation infrastructures are moving to disaggregated, pooled resources (e.g., compute, accelerators and storage) that can be dynamically composed to meet the ever-increasing and somewhat unpredictable demands of the future. All of this allows us to make efficient use of hardware to increase infrastructure agility, scalability and software control, remove various system bottlenecks and improve overall TCO.

from Help Net Security https://ift.tt/2BKARq4
